Suppose you have a portal application that is driven by highly related data, or that has displays that need a lot of heavily related data to build the controls on each page dynamically. Using MS CRM to prototype the schema quickly, and managing this controlling data within MS CRM, abstracts away a lot of grunt work and gets the portal online fast. The catch is that retrieving a long stream of related data through web services takes long enough to hurt the website's performance. Because the pages depend on which user is logged in, the website caching available out of the box does not help much: many web service calls are still needed to customize the information for each user.

What if you could cache all of the related data you need so efficiently that the portal could get the data that drives it almost instantly?

In this example there is an MS CRM Online system and an interactive Dashboard displayed on a Portal. The example is simple, but the real project contains a very large amount of data nested many levels deep. Running all of the queries through web services would take well over a minute, and a typical portal user is not that patient.

Caching Mechanism

Advantages of this mechanism:

1. Requires a single select to a local database to fetch extremely complex relational data that is already organized into a consumable format.

2. Uses a background task to do the heavy lifting and keep things up to date, so the portal user does not have to wait for many web service calls to MS CRM. The background task can also process the raw data if need be.

3. Can be part of a plan to let a portal be self-sufficient for periods of time when the CRM system is not available.
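As a rough illustration of the third point, the portal could decide what to do with a cached entry based on how old it is. The sketch below assumes each cached entry carries a last-updated timestamp; the names `CacheState` and `CachePolicy` and the `maxAge` parameter are hypothetical and not part of the original project.

```csharp
using System;

// Hypothetical names for illustration only.
public enum CacheState { Missing, Fresh, Stale }

public static class CachePolicy
{
    // lastUpdated is null when no cached row exists for the account.
    public static CacheState Classify(DateTime? lastUpdated, DateTime now, TimeSpan maxAge)
    {
        if (!lastUpdated.HasValue)
            return CacheState.Missing;

        return (now - lastUpdated.Value) <= maxAge ? CacheState.Fresh : CacheState.Stale;
    }

    // A stale entry is still served (for example while CRM is unreachable);
    // only a missing entry forces a live call to CRM.
    public static bool CanServeFromCache(CacheState state)
    {
        return state != CacheState.Missing;
    }
}
```

With a policy like this, a stale entry could be shown with an "as of" timestamp while the background task catches up, instead of blocking the page on CRM.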

Overview

Part 1: Filling the Cache

- Query data from MS CRM via web services in a background task. This could be a scheduled application or a service on a timer that keeps the cache updated.

- Put data into related serializable containers under one container with all of the information needed for a single account in the format needed to drive your application.

- Serialize this container.

- Write it to the local SQL database as a single blob with an identifier.
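The background task from the first step could be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the original project's implementation: `CacheRefresher` is a hypothetical class, and the two delegates stand in for "list the accounts to cache" and "query CRM, serialize, and write the blob for one account".

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical helper: runs a refresh pass on a timer.
public sealed class CacheRefresher : IDisposable
{
    private readonly Func<IEnumerable<Guid>> _getAccountIds;
    private readonly Action<Guid> _refreshAccount;
    private Timer _timer;
    private int _running; // 0 = idle, 1 = a pass is in progress

    public CacheRefresher(Func<IEnumerable<Guid>> getAccountIds, Action<Guid> refreshAccount)
    {
        _getAccountIds = getAccountIds;
        _refreshAccount = refreshAccount;
    }

    // Starts immediately, then repeats at the given interval.
    public void Start(TimeSpan interval)
    {
        _timer = new Timer(_ => RunOnce(), null, TimeSpan.Zero, interval);
    }

    // Runs one pass and returns how many accounts were refreshed.
    // Returns 0 without doing anything if the previous pass is still running.
    public int RunOnce()
    {
        if (Interlocked.Exchange(ref _running, 1) == 1)
            return 0;

        int refreshed = 0;
        try
        {
            foreach (var accountId in _getAccountIds())
            {
                try
                {
                    _refreshAccount(accountId);
                    refreshed++;
                }
                catch (Exception ex)
                {
                    // One failing account should not stop the rest of the pass.
                    Console.Error.WriteLine("Refresh failed for " + accountId + ": " + ex.Message);
                }
            }
        }
        finally
        {
            Interlocked.Exchange(ref _running, 0);
        }
        return refreshed;
    }

    public void Dispose()
    {
        if (_timer != null)
            _timer.Dispose();
    }
}
```

The overlap guard matters because a full pass over CRM can take longer than the timer interval; skipping a tick is usually preferable to running two passes at once.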

Part 2: Reading the Cache

- With a single select from the local database return the entire blob of information needed.

- Deserialize this blob.

- Cast it to the Parent container class.

- Consume it in your Portal application.

Example Code

This example is deliberately simple. The class structure and the queries that pull MS CRM data can be as complex as needed. The real gains come when there is a lot of related data that would otherwise require a large number of queries.

Nested Serialized Classes

All classes must be serializable. The top level container class holds all of the other containers or lists of containers.

using System;
using System.Collections.Generic;
using System.Data;
using System.Data.SqlClient;
using System.IO;
using System.Linq;
using System.Web;
using System.Xml;
using System.Xml.Serialization;

/// <summary>
/// Top Level Serializable Container
/// All classes contained must also be serializable
/// </summary>
[Serializable]
public class DashboardBlob
{
    public Guid AccountId;
    public DateTime LastUpdated;
    public List<ContactS> students = new List<ContactS>();
}

/// <summary>
/// Nested Serializable class
/// </summary>
[Serializable]
public class ContactS
{
    public Guid ContactId;
    public string FirstName;
    public string LastName;
    public string EMailAddress1;
}
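Before wiring up SQL, it can help to confirm that a container survives a serialize and deserialize round trip. The sketch below uses small stand-in types (`DemoBlob`, `DemoContact`, and `RoundTripDemo` are hypothetical names) rather than the real containers. Note that `XmlSerializer` works with public types that have a public parameterless constructor and reads public fields and properties; it does not itself depend on the `[Serializable]` attribute.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Xml.Serialization;

// Stand-in types for illustration; the real containers can be much deeper.
public class DemoContact
{
    public Guid ContactId;
    public string LastName;
}

public class DemoBlob
{
    public Guid AccountId;
    public List<DemoContact> Students = new List<DemoContact>();
}

public static class RoundTripDemo
{
    // Serialize the whole object graph to one XML string.
    public static string ToXml(DemoBlob blob)
    {
        var serializer = new XmlSerializer(typeof(DemoBlob));
        using (var writer = new StringWriter())
        {
            serializer.Serialize(writer, blob);
            return writer.ToString();
        }
    }

    // Rebuild the object graph from the XML string.
    public static DemoBlob FromXml(string xml)
    {
        var serializer = new XmlSerializer(typeof(DemoBlob));
        using (var reader = new StringReader(xml))
        {
            return (DemoBlob)serializer.Deserialize(reader);
        }
    }
}
```

A quick check is to build a `DemoBlob` with one contact, pass it through `ToXml` and `FromXml`, and compare the ids and names on both sides.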

Filling the Serialized Classes

Any approach works here, as long as it queries the data needed and fills the appropriate serializable classes.

/// <summary>
/// Create Top level container and call methods to fill all nested containers
/// </summary>
/// <param name="accountId">Account whose dashboard data should be gathered</param>
public static DashboardBlob FillDashboardBlob(Guid accountId)
{
    var blob = new DashboardBlob
    {
        AccountId = accountId,
        LastUpdated = DateTime.Now,
        students = GetSerializedContactsByAccount(accountId)
        //pull all other related data and nested data.
    };
    return blob;
}

/// <summary>
/// Query that pulls data from a CRM context and stores it in a serializable class
/// </summary>
/// <param name="accountId">Account whose related student contacts are wanted</param>
/// <returns>Contacts related to the account, ordered by last name and then first name</returns>
public static List<ContactS> GetSerializedContactsByAccount(Guid accountId)
{
    var serializedContacts =
        (from c in DemoContext.ContactSet
         join students in DemoContext.new_account_studentSet on c.ContactId equals students.contactid
         where students.new_accountid == accountId
         orderby c.LastName, c.FirstName
         select new ContactS
         {
             ContactId = c.ContactId.Value,
             FirstName = c.FirstName,
             LastName = c.LastName,
             EMailAddress1 = c.EMailAddress1
         }).ToList();
    return serializedContacts;
}

/// <summary>

/// Update that writes the blob back to SQL

/// </summary>

/// <param name="blob"></param>

/// <param name="connectionString"></param>

/// <param name="isCreate"></param>

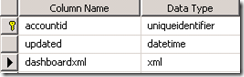

public static void SaveUpdateDashboardBlob(DashboardBlob blob, string connectionString, bool isCreate)

{

var bytes = SerializeAnObject(blob);

var connection = new SqlConnection(connectionString);

SqlCommand sqlCmd;

connection.Open();

if( isCreate)

sqlCmd = new SqlCommand("INSERT INTO dbo.MainCache(accountid,updated,dashboardxml) VALUES (@id,@updated,@xml)", connection);

else

sqlCmd = new SqlCommand("UPDATE dbo.MainCache Set updated=@updated, dashboardxml=@xml where accountid=@id", connection);

sqlCmd.Parameters.Add("@id", SqlDbType.UniqueIdentifier);

sqlCmd.Parameters["@id"].Value = blob.AccountId;

sqlCmd.Parameters.Add("@updated", SqlDbType.DateTime);

sqlCmd.Parameters["@updated"].Value = blob.LastUpdated;

sqlCmd.Parameters.Add("@xml", SqlDbType.Xml, Int32.MaxValue);

sqlCmd.Parameters["@xml"].Value = bytes;

sqlCmd.ExecuteNonQuery();

connection.Close();

}
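The `isCreate` flag means the caller has to know whether a row already exists for the account. One possible alternative, sketched here as an assumption rather than as what the original project does, is a single T-SQL batch that tries the update first and inserts only when no row was touched. `MainCacheUpsert` is a hypothetical helper; it is written against the provider-neutral `System.Data` interfaces, so a `SqlConnection` can be passed in as the `IDbConnection`. The pattern assumes a single background writer, since two concurrent writers could both see zero rows updated.

```csharp
using System;
using System.Data;

// Hypothetical helper for illustration only.
public static class MainCacheUpsert
{
    // Update first; insert only when the update matched no row.
    public const string Sql =
        "UPDATE dbo.MainCache SET updated=@updated, dashboardxml=@xml WHERE accountid=@id; " +
        "IF @@ROWCOUNT = 0 " +
        "INSERT INTO dbo.MainCache(accountid,updated,dashboardxml) VALUES (@id,@updated,@xml);";

    // Builds the command; the caller opens the connection and calls ExecuteNonQuery.
    public static IDbCommand Build(IDbConnection connection, Guid accountId, DateTime updated, string xml)
    {
        var cmd = connection.CreateCommand();
        cmd.CommandText = Sql;
        AddParameter(cmd, "@id", DbType.Guid, accountId);
        AddParameter(cmd, "@updated", DbType.DateTime, updated);
        AddParameter(cmd, "@xml", DbType.Xml, xml);
        return cmd;
    }

    private static void AddParameter(IDbCommand cmd, string name, DbType type, object value)
    {
        var parameter = cmd.CreateParameter();
        parameter.ParameterName = name;
        parameter.DbType = type;
        parameter.Value = value;
        cmd.Parameters.Add(parameter);
    }
}
```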

/// <summary>
/// Serialize an object to an XML string using XmlSerializer
/// </summary>
public static string SerializeAnObject(object anObject)
{
    var xmlSerializer = new XmlSerializer(anObject.GetType());
    using (var writer = new StringWriter())
    {
        xmlSerializer.Serialize(writer, anObject);
        return writer.ToString();
    }
}

/// <summary>
/// Read the blob from local SQL database, deserialize it and cast it back to the container class.
/// </summary>
/// <param name="accountId">Account whose cached dashboard data is wanted</param>
/// <param name="connectionString">Connection string for the local cache database</param>
/// <returns>The cached container, or null when no row exists for the account</returns>
public static DashboardBlob GetDashboardBlob(Guid accountId, string connectionString)
{
    using (var connection = new SqlConnection(connectionString))
    using (var adapter = new SqlDataAdapter("select dashboardxml from dbo.MainCache where accountid=@id", connection))
    {
        // Parameterized to match the write path instead of formatting the id into the SQL text
        adapter.SelectCommand.Parameters.Add("@id", SqlDbType.UniqueIdentifier).Value = accountId;

        // Fill opens the connection if it is closed and closes it again afterwards
        var table = new DataTable();
        adapter.Fill(table);

        if (table.Rows.Count == 0)
            return null;

        return (DashboardBlob)DeSerializeAnObject(table.Rows[0][0].ToString(), typeof(DashboardBlob));
    }
}

public static Object DeSerializeAnObject(string xmlOfAnObject, Type objectType)
{
    var xmlSerializer = new XmlSerializer(objectType);

    // Disposing the readers replaces the explicit try/finally Close calls
    using (var strReader = new StringReader(xmlOfAnObject))
    using (var xmlReader = new XmlTextReader(strReader))
    {
        return xmlSerializer.Deserialize(xmlReader);
    }
}
